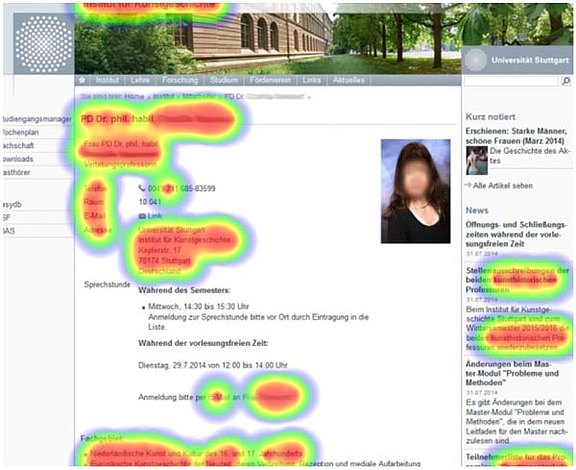

Example: Text Analysis

- Finding thematically related texts

- Supervised learning/unsupervised learning

- Results: keywords, clusters of texts with similar content

- Sources: product descriptions, websites, documentation, maintenance reports, etc.

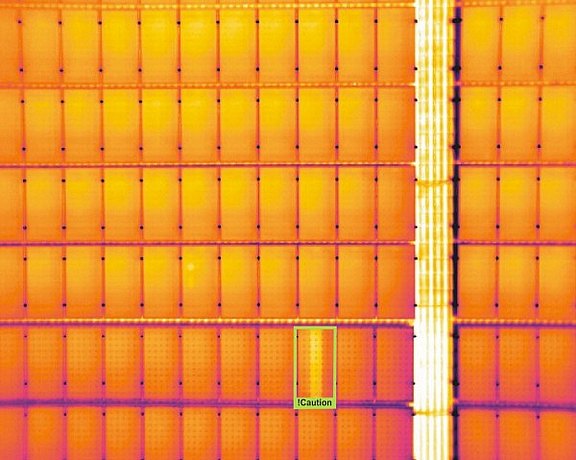

Example: Image Recognition

- Analysis of images/videos

- Object detection/tracking/counting

- Validation of object properties

Do you know what your data holds?

Nobody knows your business better than you do. But what about your data? Your data assets are constantly changing and may hold untapped potential for process optimization, new products, or services. We analyze your data based on your requirements.

Data Craftsmen and AI Experts

The data scientists at mindUp are experts in difficult data tasks and bring know-how in data mining and artificial intelligence (AI), or more specifically, machine learning.

25 years of experience in data analytics

Machine learning

Artificial intelligence

Neural networks

Deep learning

Text mining

Data analytics needs robust tools

Easy integration into your business processes

Tools tailored precisely to your requirements

Studies

Market research

Business reports for management

Data monitoring

Trend analysis